February 27, 2023

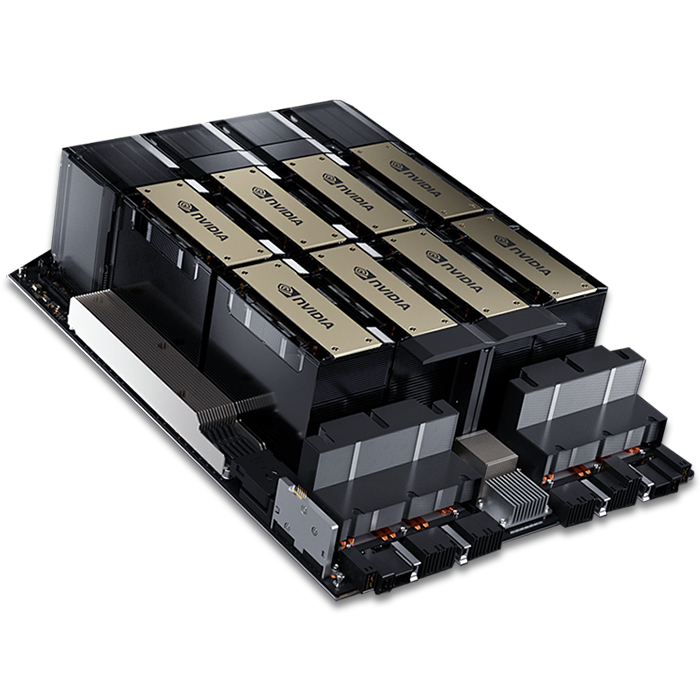

NVIDIA is the leading player in the GPU market, yet choosing the right NVIDIA GPU server for your organization can be challenging. In this blog post, we compare the PCIe and SXM5 form factors of the NVIDIA H100 GPU, the highest-performing GPU available at the time of writing, and contrast their performance and costs to help you make an informed decision.