Explosive Demand for H100 GPUs

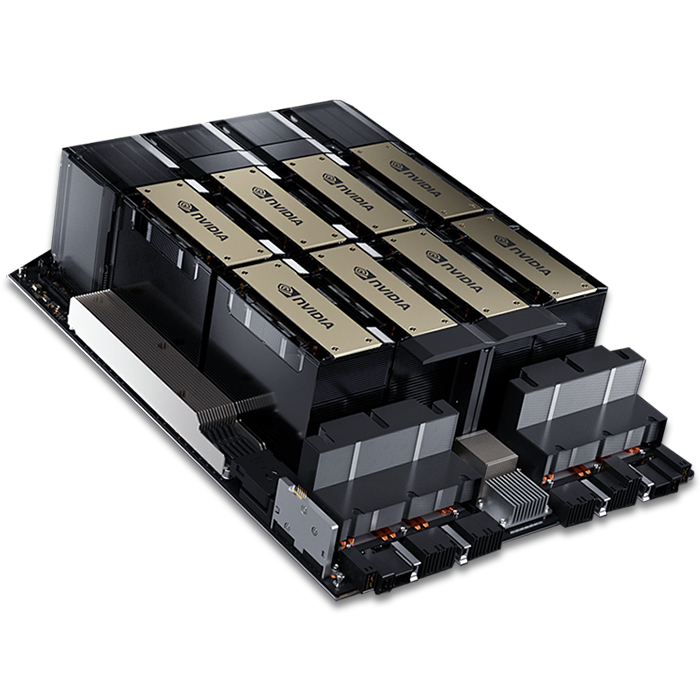

With the current boom in Generative AI, demand for enterprise graphics cards is at an all-time high, and NVIDIA is dominating the industry with an estimated market share of 90%. Accessing massive amounts of computing power to fuel training and inference has become a determining factor in how quickly AI products can be brought to market. No GPU model is in higher demand than NVIDIA's new H100 SXM5 Tensor Core GPU. Boasting impressive performance improvements over its predecessor, NVIDIA's A100 SXM4 GPU, the H100 is quickly becoming the most important asset in any company's HPC infrastructure.

The demand for NVIDIA H100s has drastically outpaced supply, with lead times for H100 nodes growing by the day. Arc's current lead time for H100 SXM5 nodes is 10-14 weeks, while NVIDIA is quoting 6+ months. As the first H100 clusters continue to deploy in the coming months, many companies will be stuck with their NVIDIA V100 and A100 chips for longer than expected. These companies will face the challenge of continuing to innovate on older, slower hardware than some of their competitors are using.

Saved by the Cloud?

Many companies affected by the chip shortage that cannot promptly add H100 GPUs to their on-premises infrastructure will look to the cloud to fill the gap. Unfortunately, accessing H100s in the cloud is much easier said than done. Organizations that have already turned to the cloud to make up for delayed shipments are finding that the supply shortage has also constrained cloud service providers (CSPs). These shortages have led cloud providers to require longer contracts, large cluster commitments, and upfront payments, and to delay deliveries. These stringent requirements have reduced the market's competitiveness, as only companies with considerable capital can meet them. Sam Altman, the CEO of OpenAI, recently complained in a (now deleted) post that a chip shortage was 'delaying' ChatGPT plans. Luckily for OpenAI, it has the backing of Microsoft. In our first-hand experience, it is evident that Microsoft used its weight to influence NVIDIA into reallocating paid-for orders designated for other organizations, satisfying OpenAI's GPU appetite.

So, what can you do if you can't influence H100 allocations?

Optimizing Your Current Hardware

Low GPU utilization is a widespread problem across industries that rely on HPC infrastructure, with average utilization rates of just 20-30%. Raising utilization through fractionalization, which stacks multiple tasks, jobs, or workloads on the same GPU, would let an organization's existing accelerated hardware complete more work in the same time and so reduce overall compute times. This optimization would mitigate the need to acquire new H100s immediately.
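To make the idea of stacking workloads concrete, here is a minimal sketch that packs jobs onto shared GPUs with a first-fit-decreasing heuristic. It assumes each job's demand can be expressed as a single fraction of one GPU, which is a simplification: real schedulers also have to account for memory, bandwidth, and interference between jobs. The function names and the example job sizes are hypothetical and do not describe how ArcHPC, MIG, MPS, or vGPU place work.

```python
def pack_jobs(job_fractions, capacity=1.0):
    """Place jobs on as few GPUs as this heuristic finds (first-fit decreasing).

    job_fractions: fraction of one GPU each job needs (assumed known up front).
    Returns a list of GPUs, each a list of the job fractions placed on it.
    """
    gpus = []
    for frac in sorted(job_fractions, reverse=True):
        if not 0 < frac <= capacity:
            raise ValueError(f"job size {frac} does not fit on one GPU")
        for placed in gpus:
            # Small tolerance so floating-point rounding does not reject an exact fit.
            if sum(placed) + frac <= capacity + 1e-9:
                placed.append(frac)
                break
        else:
            # No existing GPU has room, so bring another GPU into use.
            gpus.append([frac])
    return gpus


def average_utilization(gpus, capacity=1.0):
    """Mean fraction of capacity in use across the GPUs that hold work."""
    return sum(sum(placed) for placed in gpus) / (len(gpus) * capacity)


# Hypothetical workload: eight jobs that each keep a GPU 20-30% busy.
jobs = [0.25, 0.3, 0.2, 0.25, 0.3, 0.2, 0.25, 0.25]
dedicated = [[j] for j in jobs]   # one job per GPU
shared = pack_jobs(jobs)          # jobs stacked on shared GPUs

print(len(dedicated), round(average_utilization(dedicated), 2))
print(len(shared), round(average_utilization(shared), 2))
```

With these made-up job sizes, running one job per GPU occupies eight GPUs at 25% average utilization, while the packed layout fits the same jobs on three GPUs at roughly 67%.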

Optimization by Fractionalization with ArcHPC

Reworking your organization's tech stack with GPU fractionalization can be achieved with a few different solutions available in the market (e.g., MIG, MPS, and vGPU). Unfortunately, these solutions vary in quality, can be difficult to implement, and suffer from implicit synchronization issues. Synchronization issues occur when fractionalized workloads share the same CPU host, which sees them as one large job. When this is the case, a null execution line causes all of the workloads to be synchronized with one another, delaying task completion. ArcHPC, Arc's GPU optimization software suite for enabling complete GPU utilization and improved performance, doesn't have this synchronization issue. ArcHPC solves implicit synchronization issues and enables task/job/workload matching on a deeper level than any other solution available. With ArcHPC fully integrated, you could halve your infrastructure needs by increasing GPU performance by 35-206%, reducing compute times drastically.
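As a rough check on how a performance gain translates into infrastructure needs, the sketch below converts a percentage gain into the number of GPUs required for the same workload. It assumes "performance" means throughput on a fixed workload and that the gain applies uniformly across the fleet. Neither assumption comes from ArcHPC's documentation, and the fleet size of 100 GPUs is purely illustrative.

```python
import math


def gpus_needed(current_gpus, gain_percent):
    """GPUs required for the same workload after a per-GPU throughput gain.

    Assumes throughput scales linearly with the number of GPUs, so a gain of
    g percent means each GPU does (1 + g/100) times as much work.
    """
    multiplier = 1 + gain_percent / 100
    return math.ceil(current_gpus / multiplier)


# Illustrative fleet of 100 GPUs at the low end, midpoint case, and high end.
for gain in (35, 100, 206):
    print(gain, gpus_needed(100, gain))
```

Under these assumptions, a 35% gain reduces a 100-GPU fleet to 75 GPUs, a 100% gain is the point at which the fleet is halved to 50, and a 206% gain brings it down to 33.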

Conclusion

Securing the best GPU resources will be challenging as the AI industry continues its explosive growth. With prolonged supply shortages for NVIDIA H100 GPUs, organizations must fully optimize their current HPC infrastructure to remain competitive. GPU fractionalization is the best technique for increasing GPU utilization and performance, but existing solutions suffer from inherent synchronization issues that reduce efficiency. ArcHPC has resolved these issues and offers organizations a truly optimized GPU utilization and performance solution.