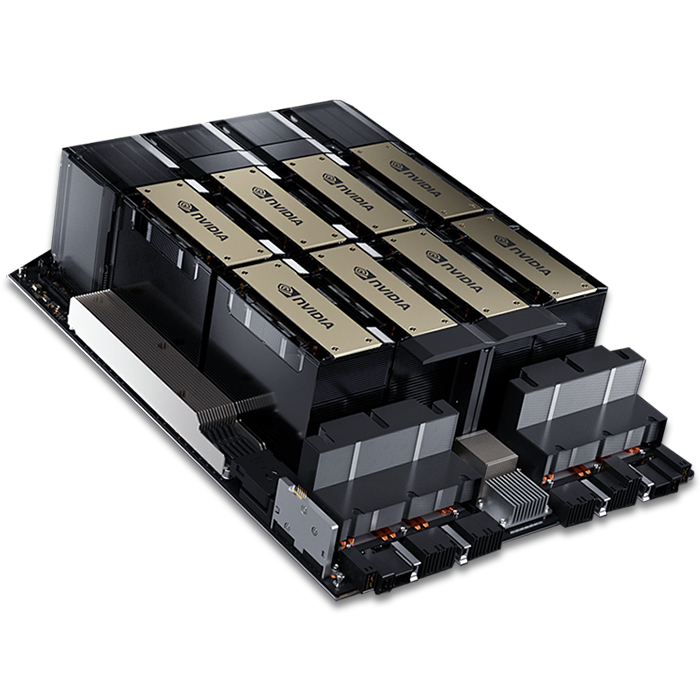

Significant breakthroughs have occurred within the field of artificial intelligence in the past year. As new AI solutions surface, a push for faster data processing and model training has gained momentum. Data center GPUs have become the backbone of high-performance computing in AI, enabling breakthroughs that were once deemed impossible. Yet, a significant challenge emerges as companies race to harness their power: unoptimized and over-provisioned GPU resources. This post explores the intricate balance between leveraging the power of GPUs and optimizing their usage to ensure efficiency, sustainability, and cost-effectiveness.

The Cost of Over-Provisioning

The practice of allocating more GPU resources than necessary is a common yet costly misstep for many organizations. This approach leads to wasted computational capacity, inflated expenses, and a larger carbon footprint, undermining financial health and environmental sustainability. The additional management complexity of these resources also diverts the focus of IT and engineering teams from innovation to maintenance, slowing progress and reducing operational agility.

A common occurrence when over-provisioning GPU resources is the underutilization of onboard memory, especially the L2 cache. Research conducted by Arc Compute found that, when training with NVIDIA's most optimized library, CuBLAS, a GPU that only reaches 95% L2 cache utilization, underperforms by 160-176%. In this case, the GPU only achieved 57-63% of its capabilities and could have completed additional tasks concurrently. These results highlight a significant missed opportunity.

The Imperative of GPU Optimization

In the context of AI, where data volumes and computational needs are ever-expanding, optimizing GPU resources is not merely a technical task but a strategic necessity. Efficient GPU utilization brings many benefits, including reduced operational costs, enhanced processing speeds, and a minimized environmental impact. By fully harnessing the computational power of GPUs, companies can accelerate AI model development and deployment, driving innovation at a faster pace and with more significant impact.

Understanding the Execution Model and Warp Stalls

A foundational aspect of GPU optimization involves a deep dive into the GPU execution model, specifically addressing the concept of warps and the detrimental effect of warp stalls. Warps, which are groups of threads executing instructions in unison, can experience stalls due to various factors such as memory latency, data dependency issues, or control flow divergence. These stalls can significantly hamper GPU efficiency, leading to underutilization of resources and increased processing times.

Strategies for Optimization

The following strategies are essential for addressing these challenges and achieving optimal GPU performance:

- Profiling and Benchmarking: Leveraging tools to analyze application performance is crucial for identifying warp stalls and other bottlenecks. Profiling provides insights into how to mitigate these issues by optimizing code execution.

- Algorithm Efficiency: Optimizing algorithms to better align with GPU architecture can significantly reduce warp stalls. Techniques like memory coalescing, minimizing control flow divergence, and leveraging shared memory are crucial to enhancing GPU performance.

- Dynamic Resource Allocation: Implementing systems that dynamically adjust GPU resource allocation based on real-time demand ensures that GPUs are fully utilized when needed and conserved when not, preventing over-provisioning.

- Code Optimization: Refactoring code to address identified bottlenecks, such as inefficient memory access patterns or unnecessary data transfers, can significantly improve performance and reduce the likelihood of warp stalls.

Case Studies and Practical Applications

Numerous AI industry case studies demonstrate GPU optimization's real-world impact. Companies that have successfully optimized their GPU usage report significant reductions in computational time and operational costs, alongside notable improvements in model performance and scalability. These successes underscore the tangible benefits of a strategic approach to GPU resource management, emphasizing the importance of continuous monitoring, profiling, and optimization to stay ahead in the competitive AI landscape.

As AI continues to drive technological advancement and transformation, optimizing GPU resources emerges as a critical factor for success. By addressing the challenges of over-provisioning and warp stalls through strategic optimization efforts, AI companies can enhance efficiency, reduce costs, and contribute to environmental sustainability. The journey towards optimized GPU computing is complex but rewarding, offering a path to more significant innovation, competitiveness, and operational excellence in AI.