The unveiling of NVIDIA's Blackwell architecture at GTC 2024 has sent waves of excitement through the tech community. At Arc Compute, we are eager to explore this technology and what it means for the future of computing.


NVIDIA Blackwell Architecture: A Closer Look

NVIDIA's Blackwell architecture introduces a paradigm shift in GPU design, meticulously crafted to meet the advanced requirements of AI and HPC applications. Let's delve into the core features that distinguish Blackwell:

5th Generation Tensor Cores:

At the forefront of Blackwell's innovation are the 5th generation Tensor Cores, engineered to elevate AI computations to new heights. These cores are pivotal in achieving significant advancements in performance and efficiency, essential for the rapid development and deployment of AI models. NVIDIA has added new precision formats, stating that "as generative AI models explode in size and complexity, it's critical to improve training and inference performance. To meet these compute needs, Blackwell Tensor Cores support new quantization formats and precisions, including community-defined microscaling formats".

192 GB of HBM3e Memory:

Blackwell pairs 192 GB of HBM3e memory with up to 8 TB/s of memory bandwidth. That capacity and speed matter for data-intensive applications: larger AI models and HPC datasets can fit in GPU memory and be fed to the compute units quickly.
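To put those two figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the 192 GB and 8 TB/s numbers quoted above. Everything else is our own simplifying assumption: decimal gigabytes, weights only (no activations, KV cache, optimizer state, or scale factors), and peak bandwidth sustained for the whole read. The function names are ours, for illustration.

```python
# Back-of-the-envelope sizing from the figures quoted in the text.
HBM_CAPACITY_GB = 192      # from the text
HBM_BANDWIDTH_TBPS = 8     # from the text ("up to")

# Bits per weight for common precisions.
# Assumption: weights only, no scale factors or runtime buffers counted.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def max_params_billions(capacity_gb: float, bits: int) -> float:
    """Upper bound on parameters (in billions) that fit in capacity_gb."""
    capacity_bytes = capacity_gb * 1e9  # assumption: decimal gigabytes
    return capacity_bytes * 8 / bits / 1e9


def full_sweep_ms(capacity_gb: float, bandwidth_tbps: float) -> float:
    """Minimum time (ms) to read the whole memory once at peak bandwidth."""
    seconds = capacity_gb / (bandwidth_tbps * 1000)
    return seconds * 1000


for name, bits in BITS_PER_PARAM.items():
    billions = max_params_billions(HBM_CAPACITY_GB, bits)
    print(f"{name}: ~{billions:.0f}B parameters (weights only)")

sweep = full_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TBPS)
print(f"Full memory sweep at peak bandwidth: ~{sweep:.0f} ms")
```

Under these assumptions, the weights-only ceiling is roughly 96 billion parameters at FP16, 192 billion at FP8, and 384 billion at FP4, and reading all 192 GB once takes at least about 24 ms. Real workloads will land below these ceilings.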

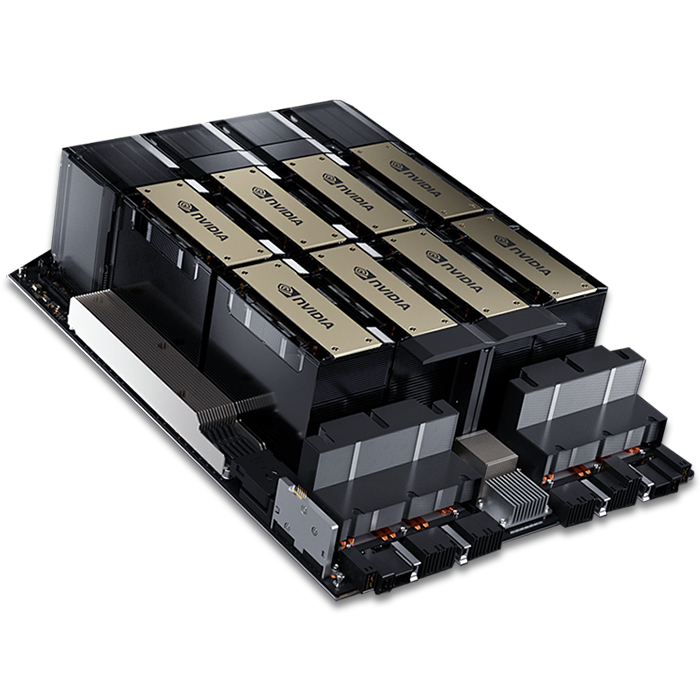

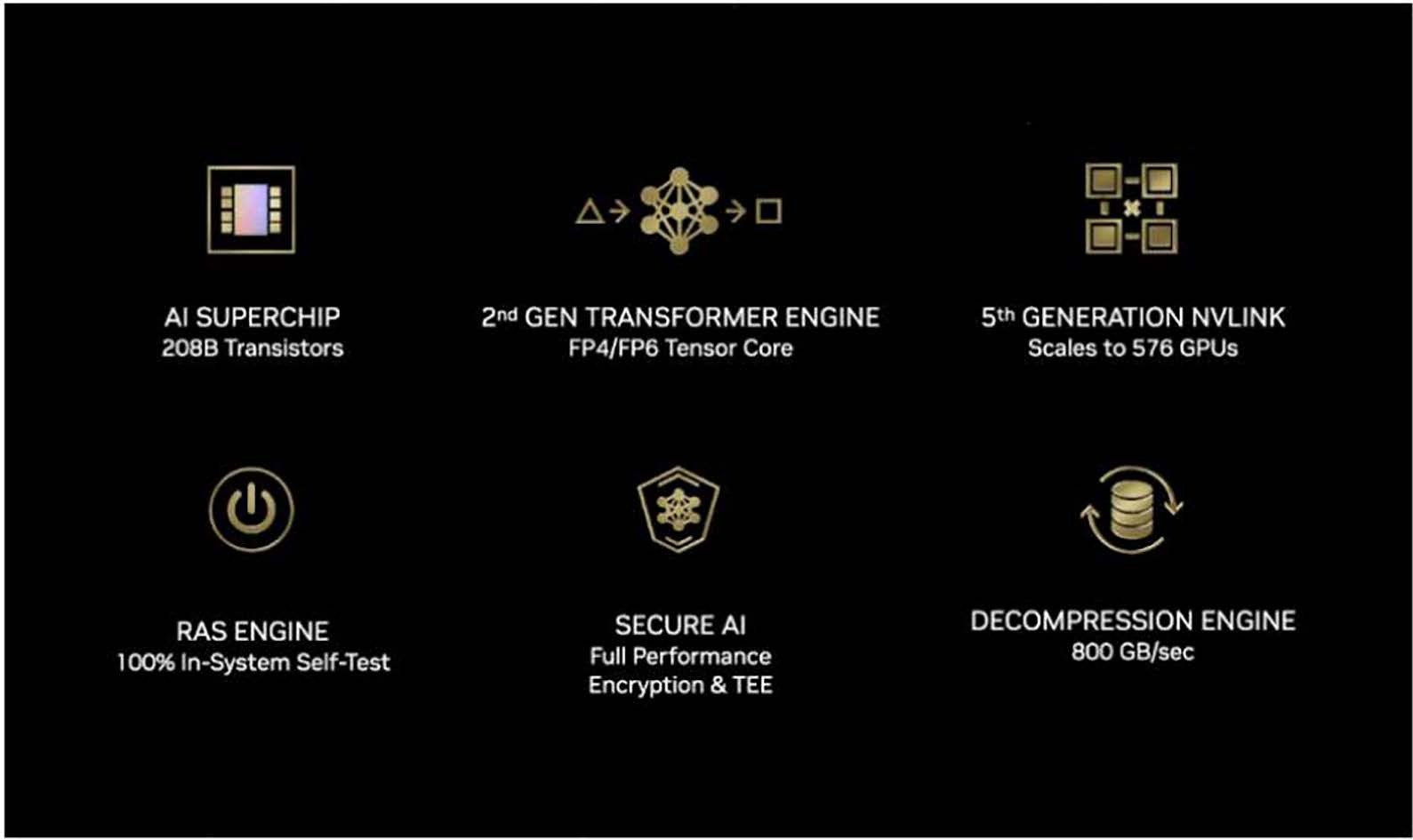

208 Billion Transistors and Dual-Die Design:

Blackwell GPUs are built with 208 billion transistors on a custom-built TSMC 4NP process. The architecture features two reticle-limited dies connected by a high-speed, 10 TB/s chip-to-chip interconnect. This dual-die design puts two dies' worth of computational resources in a single GPU, enhancing data processing and overall performance.

Second-Generation Transformer Engine:

The second-generation Transformer Engine is at the core of Blackwell's AI prowess. It leverages custom Blackwell Tensor Core technology alongside NVIDIA® TensorRT™-LLM and NeMo™ Framework innovations to accelerate inference and training for large language models and Mixture-of-Experts (MoE) models. New precision formats and micro-tensor scaling techniques enable 4-bit floating point (FP4) AI, which doubles the performance and the size of next-generation models that memory can support while maintaining high accuracy.
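To make the idea of fine-grained scaling concrete, here is a minimal Python sketch of block-scaled 4-bit quantization in the spirit of the community-defined microscaling formats. It is an illustration under stated assumptions, not a description of how Blackwell hardware or the Transformer Engine implements FP4. We assume an E2M1 value grid, blocks of 32 elements, and one power-of-two scale per block. The function names are ours, and ties are rounded toward the smaller magnitude for simplicity.

```python
import math

# Magnitudes representable by a 4-bit E2M1 float (sign is handled separately).
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX_EXPONENT = 2  # largest power of two in the grid is 2**2 = 4
BLOCK_SIZE = 32       # assumption: elements sharing one scale


def quantize_block(block):
    """Return (scale, codes); each code is a signed value from FP4_GRID."""
    amax = max(abs(x) for x in block)
    if amax == 0.0:
        return 1.0, [0.0] * len(block)
    # Shared power-of-two scale so the largest value lands near the grid top.
    scale = 2.0 ** (math.floor(math.log2(amax)) - FP4_MAX_EXPONENT)
    codes = []
    for x in block:
        mag = min(abs(x) / scale, FP4_GRID[-1])  # clamp to the largest code
        nearest = min(FP4_GRID, key=lambda g: abs(g - mag))  # nearest grid point
        codes.append(math.copysign(nearest, x))
    return scale, codes


def dequantize_block(scale, codes):
    """Reconstruct approximate values from a shared scale and FP4 codes."""
    return [scale * c for c in codes]


def quantize(values, block_size=BLOCK_SIZE):
    """Split values into blocks and quantize each block independently."""
    return [
        quantize_block(values[i:i + block_size])
        for i in range(0, len(values), block_size)
    ]


if __name__ == "__main__":
    weights = [0.02 * i - 0.3 for i in range(64)]  # made-up sample data
    for scale, codes in quantize(weights):
        restored = dequantize_block(scale, codes)
        print(f"scale={scale}, first restored values={restored[:4]}")
```

Because each small block carries its own scale, a single outlier only degrades the precision of its own block rather than the whole tensor. If the shared scale is stored in 8 bits (an assumption here), a 32-element block costs 32 × 4 + 8 = 136 bits, or 4.25 bits per element.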

Fifth-Generation NVLink:

Blackwell introduces the fifth iteration of NVIDIA NVLink®, offering 1.8 TB/s of bidirectional throughput per GPU. This enables high-speed communication among up to 576 GPUs, which is crucial for training and serving large LLMs that span many GPUs.
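As a quick sanity check on what those numbers imply, here is a small Python sketch. It uses only the 1.8 TB/s and 576-GPU figures quoted above. We assume that the bidirectional figure splits evenly into two directions and that peak bandwidth is sustained with no protocol or topology overhead. The function names are ours, for illustration.

```python
NVLINK_BIDIR_TBPS = 1.8  # per GPU, from the text
MAX_GPUS = 576           # from the text


def aggregate_bandwidth_tbps(num_gpus, per_gpu_tbps=NVLINK_BIDIR_TBPS):
    """Sum of per-GPU NVLink bandwidth across a domain (idealized)."""
    return num_gpus * per_gpu_tbps


def transfer_time_ms(payload_gb, per_gpu_tbps=NVLINK_BIDIR_TBPS):
    """Idealized one-way time (ms) to send payload_gb from a single GPU."""
    # Assumption: one direction gets half of the bidirectional figure.
    one_way_gb_per_s = per_gpu_tbps * 1000 / 2
    return payload_gb / one_way_gb_per_s * 1000


total = aggregate_bandwidth_tbps(MAX_GPUS)
print(f"Aggregate NVLink bandwidth across {MAX_GPUS} GPUs: ~{total:.1f} TB/s")
print(f"Sending 192 GB one way from one GPU: ~{transfer_time_ms(192):.0f} ms")
```

Under these assumptions, a full 576-GPU domain adds up to about 1,037 TB/s, roughly 1 PB/s, and moving one GPU's entire 192 GB of memory to a peer takes at least about 213 ms.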

Advanced RAS and Secure AI Capabilities:

Blackwell-powered GPUs include a dedicated RAS (reliability, availability, and serviceability) engine, which employs AI for preventative maintenance, diagnostics, and forecasting reliability issues. Additionally, Blackwell features advanced confidential computing capabilities to protect AI models and customer data, which is crucial for industries requiring high levels of data privacy.

Arc Compute's Vision with Blackwell:

At Arc Compute, we're not just observers of technological innovation but active participants in shaping the future. NVIDIA's Blackwell architecture, with its dual-die design and extensive memory capabilities, resonates with our mission to break new ground in technology. These features are not just incremental improvements; they represent a leap forward in computational capability.

Our excitement about leveraging Blackwell extends beyond its technical specifications. It's about the potential to unlock new possibilities in AI and HPC applications, from more accurate predictive models in healthcare to complex climate simulations. The B100 GPU's capabilities align perfectly with our ambition to provide our clients with more power and smarter, more sustainable computing solutions.

ArcHPC and Blackwell:

We are enthusiastic about integrating our ArcHPC software with Blackwell's advanced capabilities. ArcHPC is an advanced GPU management solution that maximizes user and task density, optimizing GPU utilization and performance across HPC environments. By harnessing Blackwell's innovative features, we anticipate unparalleled improvements in performance and utilization, ensuring that our clients can achieve their ambitious computational goals with greater speed, accuracy, and efficiency.

Conclusion:

NVIDIA's Blackwell architecture and the B100 GPU are more than just milestones in NVIDIA's journey toward innovation; they're catalysts for change across the tech landscape. As industries gear up to establish their AI infrastructures, Blackwell's cutting-edge features promise to set new computational performance and efficiency benchmarks. At Arc Compute, our anticipation goes beyond adopting this technology; we're poised to integrate Blackwell's advancements into our solutions, propelling our clients and the broader industry toward the next frontier of AI and HPC achievements.

View the NVIDIA Blackwell Architecture Technical Brief